As AI evolves, its role will deepen, fostering more dynamic relationships between designers and machines. The question is not whether to treat AI as a tool or as an instrument, but how its dual nature can aid, inspire, and enhance design and planning practices. The research at the SHARE Lab empowers architects and urban designers to leverage AI’s computational power for efficiency-driven tasks and to expand exploratory, creative processes through its instrumental application.

The research at SHARE Lab has two objectives:

1. AI as an instrument to empower creativity

2. AI as a tool for social good

Both are distinct yet intertwined under the umbrella topic of Human-Centered AI.

AI to Empower Creativity

______


- The Evolving Role of Architects in the Age of Generative AI- Architecture has experienced a substantial shift toward the mass production of visual content, suggesting that architects are increasingly serving as curators rather than creators. The introduction of Diffusion Models in the field has accelerated this transformation, prompting architects to reconsider their role. Instead of exercising full creative control, they now guide processes shaped by algorithms and data sets. These tools, developed collaboratively by architects, algorithm designers, and data specialists, facilitate remarkable design capabilities yet challenge traditional understandings of authorship and artistic agency. By distributing creative influence more broadly, this approach democratizes certain aspects of architectural production, while also highlighting pressing ethical issues related to data transparency and algorithmic fairness. Moving forward, it is essential for architects to engage thoughtfully with these technologies, ensuring they are used responsibly and equitably. In this evolving landscape, architects must embrace their roles as informed curators, leveraging new tools to enrich rather than diminish the integrity of their work.

-Can Artificial Intelligence Mark the Next Architectural Revolution? Design Exploration in the Realm of Generative Algorithms and Search Engines- This book chapter emphasizes the need for human-computer collaboration to maximize the potential of AI, rather than treating AI as a substitute for human intelligence and critical and creative thinking. The study encourages architects to use AI as a design companion that adds layers of complexity to design beyond traditional 2D representation. Today, generative algorithms provide good solutions, but there is still room for them to incorporate human feedback. The chapter concludes with the assurance that AI is not in competition with designers; rather, it enhances and augments design and production capabilities.

-Playing Dimensions- This article introduces an innovative architectural design workflow, exploring the convergence of Big Data, Artificial Intelligence (AI), and storytelling. Through scraping, encoding, and mapping data, the approach leverages Virtual Reality (VR) and Augmented Reality (AR). Unlike conventional views that treat AI merely as an optimization tool, this method positions AI as a tool for critical thinking and idea generation, advocating experimentation with existing models in architecture practice.

-Text2Form3D- Text2Form3D is a machine-learning-based design framework that explores descriptive representations of structural forms. It combines a deep neural network algorithm with Combinatorial Equilibrium Modeling (CEM) and is trained using a dataset of structural design options labeled with vocabularies obtained from architectural and structural reports. Text2Form3D can autonomously generate new structural solutions based on user-defined queries and evaluate them using quantitative and qualitative criteria, allowing the designer to refine the design space according to their preferences.

-An AI design methodology for conceptual design- This article highlights the potential of AI in architecture by emphasizing its ability to solve problems, process data, and automate repetitive tasks. Integrating these technologies into design workflows as active tools for creativity and exploration remains a challenge. The article proposes an iterative collaboration between humans and machines within a design studio setting to explore how AI algorithms and data analysis can enhance architectural design exercises focused on placemaking in natural landscapes through small-scale projects.

-Beyond Typologies, Beyond Optimization- This paper introduces a computer-aided design framework that enables the generation of non-standard structural forms in static equilibrium by leveraging the collaboration between human and machine intelligence. The framework uses three main algorithms: Combinatorial Equilibrium Modeling (CEM) for generating structural forms, a Self-Organizing Map (SOM) for clustering options, and Gradient Boosted Trees for classifying designs. The proposed framework is validated through a real-world case study involving the design of a stadium roof.

-Multi-Axis Robotic Architectural Detailing- This project focuses on construction techniques at the detail scale, specifically exploring Multi-Axis 3D printing and the integration of Lidar 3D scanning for Generative Digital Design. By utilizing Lidar scanning, accurate point cloud models can be created and compared to the digital design in Computer-Aided Design (CAD) software. This allows for identifying and addressing tolerance issues during the design and construction process. An algorithm in CAD can then generate optimized joints based on user-defined constraints once tolerance deviations have been accounted for.
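The scan-versus-design comparison described above can be illustrated with a minimal sketch. It assumes both the Lidar scan and the CAD model are available as lists of 3D points in the same coordinate system and units; the function names, the sample coordinates, and the 5 mm tolerance are hypothetical and not taken from the project.

```python
import math


def nearest_distance(point, reference_points):
    """Distance from one scanned point to its closest design point."""
    return min(math.dist(point, ref) for ref in reference_points)


def tolerance_deviations(scan_points, design_points, tolerance):
    """Return (point, deviation) pairs whose deviation exceeds the tolerance."""
    flagged = []
    for p in scan_points:
        deviation = nearest_distance(p, design_points)
        if deviation > tolerance:
            flagged.append((p, deviation))
    return flagged


# Hypothetical data in meters: three design points and their scanned counterparts.
design = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
scan = [(0.0, 0.001, 0.0), (1.0, 0.02, 0.0), (2.0, 0.0, 0.003)]

# Assumed tolerance of 5 mm; only the second scanned point exceeds it.
out_of_tolerance = tolerance_deviations(scan, design, tolerance=0.005)
```

A brute-force nearest-neighbor search like this is only practical for small point sets; a real point cloud would likely need a spatial index.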

-Control ControlNet- This research investigates how AI image generators can enhance architectural schematic design workflows. Control images were paired with Stable Diffusion via ControlNet to produce output images that predictably reflect a specific design intent. Varying levels of detail (LOD) were established for both the schematic sketch and text prompts in order to examine the impact of visual and linguistic specificity on the final output.

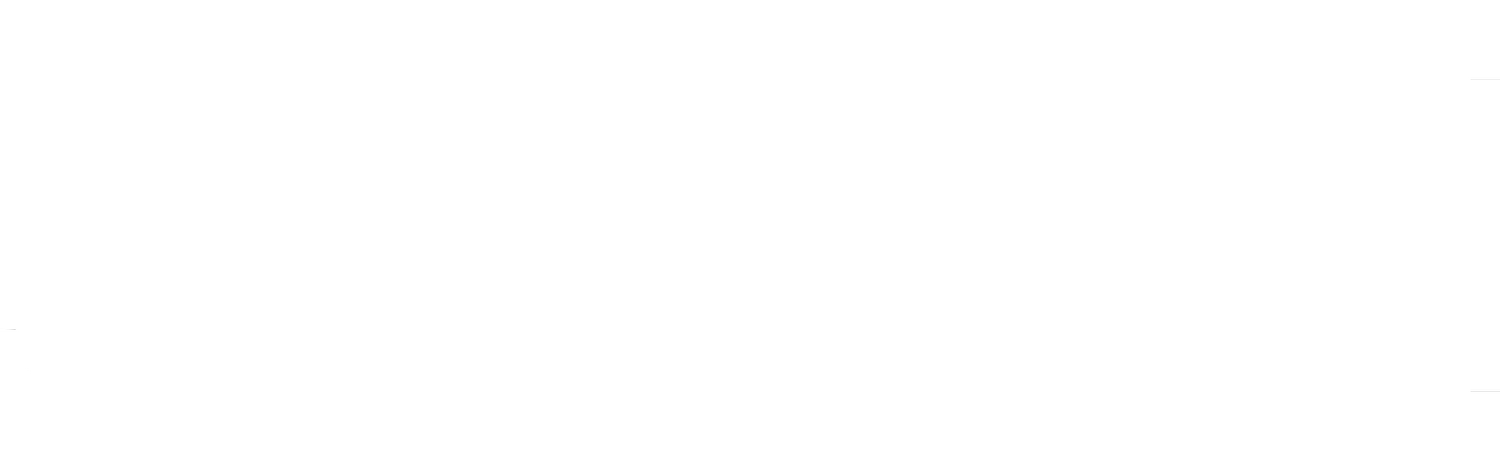

-Constructing Conditions of Design- This project addresses the challenge of navigating a large number of 3D models on the web for architectural design. By collecting 52,000 3D models and using the Self-Organizing Map (SOM) algorithm, an interactive interface was created to organize the models based on their formal properties. Students tested this tool and easily selected the desired objects for their designs. They focused on integrating the chosen models to create buildings with detailed and harmonized architectural articulations. Leveraging the abundance of available 3D models, this approach enables tailored and intricate design outcomes.
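To give a sense of how a Self-Organizing Map can arrange models by formal properties, here is a minimal pure-Python sketch. It assumes each 3D model has already been reduced to a numeric descriptor vector; the three-value descriptors, the model names, the 3x3 grid, and the training schedule are illustrative assumptions, not the project's actual features or settings.

```python
import math
import random


def best_matching_unit(weights, x):
    """Return the grid cell whose weight vector is closest to x."""
    return min(weights, key=lambda cell: math.dist(weights[cell], x))


def train_som(data, rows, cols, epochs=50, lr0=0.5, seed=0):
    """Train a small SOM and return {(row, col): weight_vector}."""
    rng = random.Random(seed)
    dim = len(data[0])
    # One randomly initialized weight vector per grid cell.
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    radius0 = max(rows, cols) / 2
    for epoch in range(epochs):
        decay = 1 - epoch / epochs            # shrinks linearly toward zero
        lr = lr0 * decay
        radius = max(radius0 * decay, 0.5)    # neighborhood narrows over time
        for x in data:
            bmu = best_matching_unit(weights, x)
            for cell, w in weights.items():
                # Cells near the winning cell on the grid are pulled harder.
                influence = math.exp(-math.dist(cell, bmu) ** 2 / (2 * radius ** 2))
                for i in range(dim):
                    w[i] += lr * influence * (x[i] - w[i])
    return weights


# Hypothetical descriptors in [0, 1], e.g. (slenderness, curvature, porosity).
models = {
    "tower_a": (0.9, 0.1, 0.2),
    "tower_b": (0.8, 0.2, 0.1),
    "shell_a": (0.2, 0.9, 0.7),
    "shell_b": (0.1, 0.8, 0.8),
}
som = train_som(list(models.values()), rows=3, cols=3)

# Each model is placed at the grid cell that best matches its descriptor.
placement = {name: best_matching_unit(som, vec) for name, vec in models.items()}
```

An interface could then lay the models out on the grid using `placement`, so that models with similar descriptors tend to appear in the same or neighboring cells.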

-City of Indexes- This research aims to assess how likable a city or neighborhood is without physically visiting it. Traditionally, urban quality assessment has relied on global city rankings or empirical and sociological approaches, limited by available information. This study instead applies ML techniques to a vast dataset of geotagged satellite and perspective images from various urban cultures. The goal is to characterize personal preferences in urban spaces and predict a range of likable places for a specific individual.

AI for Social Good

______


-AI as a Walkability Assessment Tool- This review explores AI integration in walkability assessments from 2012 to 2022, analyzing 34 studies. It highlights how diverse datasets, especially street view images, improve model accuracy and pedestrian environment assessments. The shift toward ground-level data brings subjective insights into pedestrian experiences, but questions remain on addressing non-visual perceptions and diverse demographics. The study also identifies a research gap in using emerging technologies like VR/AR and digital twins, recommending further exploration to enhance walkability tools and human-city interaction understanding.

-A theoretical framework for integrating digital twins in building lifecycle management- This chapter presents a theoretical framework for implementing Digital Twins (DTs) across several stages of the building lifecycle, including design, construction, operation, and maintenance. It begins by exploring the function of Building Information Modeling (BIM) in design and construction, emphasizing the significance of incorporating data obtained from physical buildings by deploying the Internet of Things (IoT) across the building lifecycle.

-ML for HIV incidence prediction- Recent ML advancements in HIV prediction, typically using medical records, are extended to public health datasets for STIs in a high-risk U.S. county. Aimed at enhancing interventions, the study analyzes sociodemographic factors, STI cases, and SVI metrics. ML models achieve high accuracy in predicting HIV incidence for males and females, with key predictors including age, ethnicity, and STI history. While promising tailored interventions, further research is required to implement these models effectively in public health practices.

-From BIM to Digital Twins- In the College of Design, Construction, and Planning at the University of Florida, our research spans building information modeling (BIM), bi-directional data flow coordination, and machine learning (ML) for built environment applications. Collaborating with Unreal, Autodesk, Siemens, and NVIDIA, our Digital Twin project integrates these efforts to enhance data visualization, IoT technologies, and ML applications. The primary aim is to transition from BIM LOD 400 to Digital Twin (DT). Working on three buildings—the DCP Collaboratory, Disability Resource Center (DRC), and remodeling the Student Infirmary—alongside a preliminary DT deployment at Heavener Hall, insights gained will inform subsequent developments. The ultimate DT will integrate real-time information for informed decision-making in building lifecycle management, with a transferable and scalable protocol developed for broader application across the University of Florida.

-Developing a risk to flooding from qualitative and quantitative data- This disaster resilience project focuses on 30 Gulf of Mexico universities, using a dual-scale approach to automatically identify vulnerabilities in campus facilities from street-level and aerial imagery of the built environment. At the aerial scale, satellite images and GIS maps highlight flood-prone areas, while street-view imagery is captured at the corresponding locations. Data collection, which uses the OpenStreetMap API, yields a dataset of 675,486 images. A web interface that integrates all the data gathers qualitative feedback and undergoes testing by professionals. A cluster-then-label method, based on AI algorithms, streamlines labeling. The preprocessed data then trains a neural network to predict vulnerability, introducing a qualitative metric that is correlated with quantitative metrics from GIS maps.
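The cluster-then-label idea can be sketched as follows: group similar samples first, ask a person to label one representative per group, and propagate that label to the rest of the group. The sketch assumes images have already been converted to feature vectors and uses plain k-means as the clustering step; the 2D features, the `annotate` callback that stands in for a human annotator, and the label names are all hypothetical.

```python
import math


def kmeans(points, k, iters=20):
    """Plain k-means; the first k points seed the centroids (deterministic)."""
    centroids = [list(p) for p in points[:k]]
    assignment = [0] * len(points)
    for _ in range(iters):
        assignment = [min(range(k), key=lambda j: math.dist(p, centroids[j]))
                      for p in points]
        for j in range(k):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:  # keep the old centroid if a cluster empties
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return assignment, centroids


def cluster_then_label(points, k, annotate):
    """Label one representative per cluster, then propagate to all members."""
    assignment, centroids = kmeans(points, k)
    cluster_label = {}
    for j in range(k):
        members = [p for p, a in zip(points, assignment) if a == j]
        if members:
            representative = min(members, key=lambda p: math.dist(p, centroids[j]))
            cluster_label[j] = annotate(representative)  # one human label per cluster
    return [cluster_label[a] for a in assignment]


# Hypothetical 2D image features; a real pipeline would use learned embeddings.
features = [(0.1, 0.2), (0.9, 0.8), (0.15, 0.1), (0.85, 0.9), (0.2, 0.15), (0.8, 0.85)]

# Stand-in for the human annotator who labels each cluster representative.
labels = cluster_then_label(
    features, k=2, annotate=lambda p: "vulnerable" if p[0] > 0.5 else "safe"
)
```

Here six samples receive labels from only two annotation calls, which is the saving the method is meant to provide at the scale of hundreds of thousands of images.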

-An AI unsupervised clustering of airports- When a disaster occurs, airports in the affected regions must quickly adapt to handle a surge in passengers and cargo, transitioning from regular operations to serving as humanitarian hubs. While efforts are being made to improve airport preparedness, existing initiatives often operate in isolation, limited by local experiences. This research aims to broaden the perspective by creating a global database of 971 airports, including their socio-technical characteristics in various data formats.

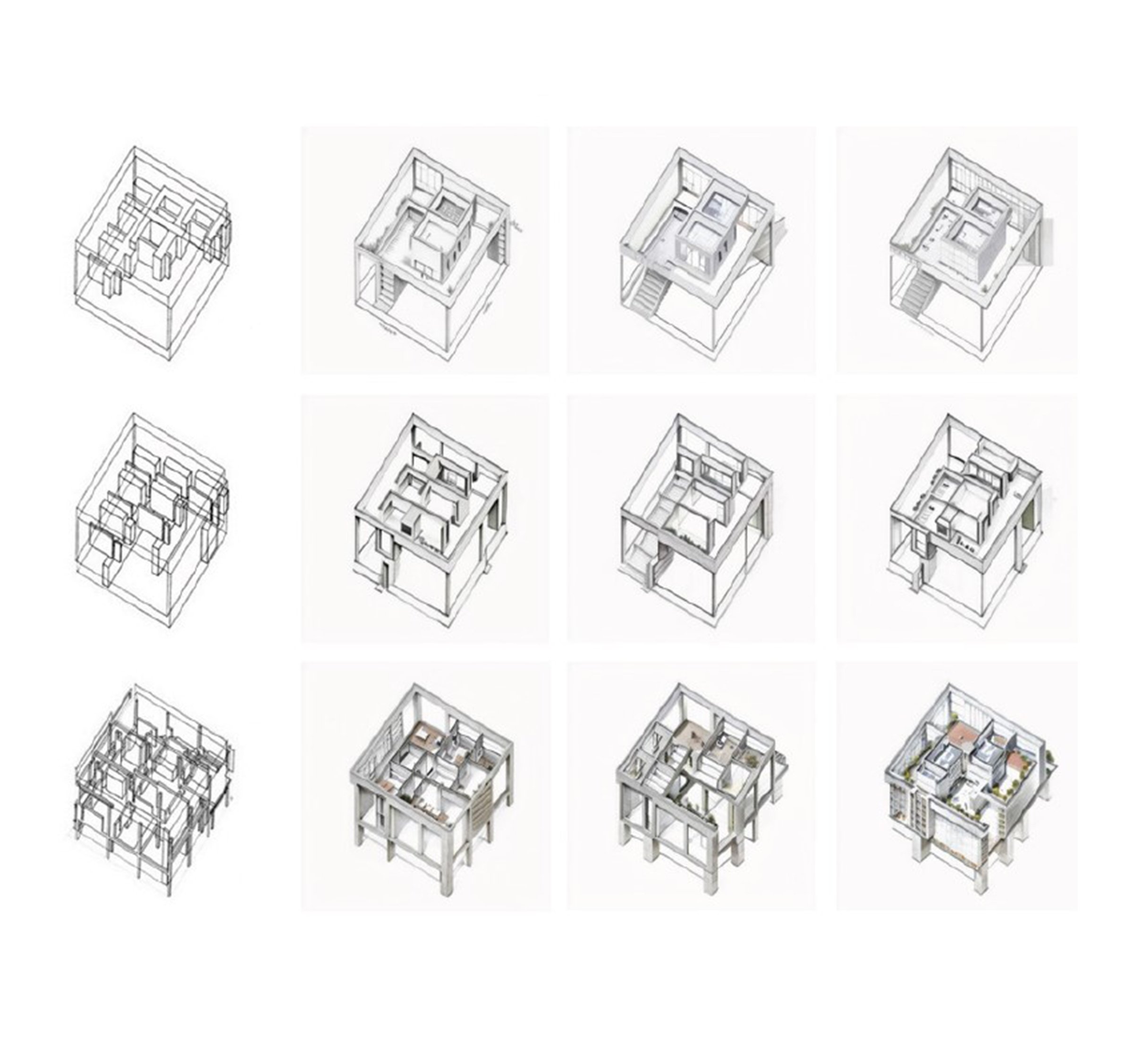

-Intelligent Transect Analysis- The study aimed to compare analogical transect analysis with an AI method for identifying urban indicators of residents' well-being. Key questions included scaling up place-based research using ML, classifying environmental features at urban/water intersections, and understanding AI's contribution to transect analysis and its connection to traditional knowledge. The study focused on three transects in Jacksonville's McCoy and Hogan creeks areas, exploring the potential of digitally generated site analysis and its integration with longstanding community knowledge for urban well-being assessments.

-Catalog of Change After Natural Disasters- This experiment focuses on encoding the changes that occur after a natural disaster using aerial images. A dataset of 50,000 aerial photos from 500 natural disaster epicenters was collected through a crawler. A Convolutional Neural Network algorithm was utilized to perform Semantic Segmentation and segment the built environment before and after the disaster. The changes were compared and transformed into numerical data, which were then organized using a self-organizing two-dimensional map (SOM). This catalog facilitates the prediction of possible changes in the built environment of new case studies.
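One simple way to turn a before/after pair of segmentation masks into numerical data is to compare the share of pixels in each class. The sketch below assumes masks are small grids of class names; the class list and the sample masks are hypothetical, and the experiment's actual encoding may differ.

```python
from collections import Counter


def class_fractions(mask, classes):
    """Fraction of pixels belonging to each class, in the order of `classes`."""
    flat = [label for row in mask for label in row]
    counts = Counter(flat)
    return [counts[c] / len(flat) for c in classes]


def change_vector(before, after, classes):
    """Per-class change in pixel share between two segmentation masks."""
    fractions_before = class_fractions(before, classes)
    fractions_after = class_fractions(after, classes)
    return [a - b for a, b in zip(fractions_after, fractions_before)]


# Hypothetical classes and 2x4 masks for one disaster site.
CLASSES = ["building", "road", "vegetation", "rubble"]
before = [["building", "building", "road", "vegetation"],
          ["building", "road", "road", "vegetation"]]
after = [["rubble", "building", "road", "vegetation"],
         ["rubble", "road", "road", "rubble"]]

change = change_vector(before, after, CLASSES)
# change == [-0.25, 0.0, -0.125, 0.375]
```

Vectors like `change`, one per site, are the kind of numerical input that a self-organizing map can then arrange into a two-dimensional catalog.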

-Machine Learning for Housing Needs- This paper proposes an ML workflow that combines multimodal data to quickly assess damages, shelter needs, and suitable locations for emergency shelters or settlements. The workflow uses open, online data for scalability and accessibility. Fusing data from various sources with a decision-level fusion approach creates a common semantic space, enabling the prediction of individual variables. The model achieved a prediction accuracy of 62% by considering satellite images and geographic and demographic conditions.
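In decision-level (late) fusion, each data source gets its own model and only the models' outputs are combined. A weighted average of class probabilities is one common way to do this and is used in the sketch below as an assumption; the paper's exact fusion rule, the modality names, the weights, and the probabilities shown are all hypothetical.

```python
def fuse_decisions(predictions, weights):
    """Weighted average of per-modality class probabilities."""
    total = sum(weights[m] for m in predictions)
    classes = next(iter(predictions.values())).keys()
    return {
        c: sum(weights[m] * predictions[m][c] for m in predictions) / total
        for c in classes
    }


# Hypothetical outputs of three separately trained models for one location.
predictions = {
    "satellite":   {"low_need": 0.2, "high_need": 0.8},
    "geographic":  {"low_need": 0.6, "high_need": 0.4},
    "demographic": {"low_need": 0.5, "high_need": 0.5},
}
# Assumed trust in each modality; satellite imagery counts double here.
weights = {"satellite": 2.0, "geographic": 1.0, "demographic": 1.0}

fused = fuse_decisions(predictions, weights)
decision = max(fused, key=fused.get)
```

Because fusion happens on outputs rather than raw inputs, a modality can be added or dropped without retraining the other models.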

-Green Plotter- Urbanization destroys green areas, prompting the need for eco-friendly policies. "GreenPlotter" is an AI-driven algorithm that integrates low-carbon design and carbon sequestration in trees for sustainable urban development. Using genetic algorithms, it optimizes land partitioning and road networks, proving successful in generating greener solutions.
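The general shape of a genetic algorithm for land partitioning can be shown with a toy problem. The sketch below is not the GreenPlotter algorithm: it assumes a strip of parcels that are each either green (1) or built (0), and an invented fitness that rewards green parcels while penalizing layouts with fewer built parcels than required. All parameters are illustrative.

```python
import random


def fitness(layout, min_built):
    """Green parcels count as reward; missing built parcels are penalized."""
    green = sum(layout)
    built = len(layout) - green
    shortfall = max(0, min_built - built)
    return green - 3 * shortfall


def evolve(n_parcels=12, min_built=5, pop_size=30, generations=40, seed=1):
    """Return the best layout found and the best fitness per generation."""
    rng = random.Random(seed)

    def score(individual):
        return fitness(individual, min_built)

    pop = [[rng.randint(0, 1) for _ in range(n_parcels)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=score, reverse=True)
        history.append(score(pop[0]))
        next_pop = [pop[0][:]]  # elitism: the best layout always survives
        while len(next_pop) < pop_size:
            # Tournament selection: best of three random individuals.
            parent_a = max(rng.sample(pop, 3), key=score)
            parent_b = max(rng.sample(pop, 3), key=score)
            cut = rng.randrange(1, n_parcels)          # one-point crossover
            child = parent_a[:cut] + parent_b[cut:]
            # Mutation: flip each parcel with 5% probability.
            child = [1 - g if rng.random() < 0.05 else g for g in child]
            next_pop.append(child)
        pop = next_pop
    return max(pop, key=score), history


best_layout, best_per_generation = evolve()
```

With elitism, the best score never decreases from one generation to the next. A realistic version would replace the toy fitness with measures such as estimated carbon sequestration and road network cost.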

-An AI Framework to Assess Food Security- This pipeline combines big data extracted from satellite images and Unmanned Aerial Vehicles (UAVs) with machine learning algorithms to address the rapid assessment, planning, exploitation, and management of agricultural resources, emphasizing food security. The pipeline involves automatic localization and classification of four types of fruit trees and semantic segmentation of the road network using a supervised deep convolutional neural network. It also proposes optimizing fruit harvesting based on factors such as maximum time and path length.

-Machine and Humans as Allies in Disaster Response- This research addresses the need to cross-validate academic studies and NGO protocols in disaster response. The experiment incorporates a large dataset of academic abstracts on disaster response and mission statements from humanitarian organizations. AI techniques, including word embedding and clustering algorithms, are used to analyze the data. Human intelligence is then applied for information selection and decision-making. The experiment’s outcome is a mockup that provides suggestions and tools tailored to specific disaster response scenarios, forecasting the potential involvement of architects in the process.

-Quantum Rails- To tackle this challenge, we utilize various datasets for the SBB rail network. This includes a geometric dataset representing the locations and IDs of Swiss train stations. Additionally, we utilize a yearly timetable from opentransportdata.ch, which allows us to calculate occupancy levels with hourly precision. This dataset is crucial for our strategy. Finally, we analyze and compare planned maintenance sites to obtain an "Index of Occupancy," which serves as a contextual measure for the spatio-temporal occupancy of operating segments.
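The source does not define how the "Index of Occupancy" is computed, so the following is only an illustrative sketch under stated assumptions: the timetable has been reduced to (segment, hour) entries, one per scheduled train, and the index for a maintenance window is the mean hourly train count on that segment divided by the busiest hourly count in the network. The segment names, the sample timetable, and the formula itself are hypothetical.

```python
from collections import defaultdict


def hourly_occupancy(timetable):
    """Count scheduled trains per (segment, hour of day)."""
    counts = defaultdict(int)
    for segment, hour in timetable:
        counts[(segment, hour)] += 1
    return counts


def occupancy_index(counts, segment, start_hour, end_hour):
    """Mean hourly count in [start_hour, end_hour), scaled by the network peak."""
    peak = max(counts.values())
    window = [counts.get((segment, h), 0) for h in range(start_hour, end_hour)]
    return sum(window) / (len(window) * peak)


# Hypothetical timetable extract: one entry per train passing a segment.
timetable = [("A-B", 7), ("A-B", 7), ("A-B", 8), ("B-C", 7), ("A-B", 2)]
counts = hourly_occupancy(timetable)

# Compare two candidate maintenance windows on the same segment.
morning = occupancy_index(counts, "A-B", 7, 9)   # 07:00 to 09:00
night = occupancy_index(counts, "A-B", 1, 4)     # 01:00 to 04:00
```

Under this definition a lower index marks a window in which maintenance would disrupt fewer scheduled trains.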